A Physicist’s Physicist Ponders the Nature of Reality

Edward Witten reflects on the meaning of dualities in physics and math, emergent space-time, and the pursuit of a complete description of nature.

November 28, 2017

Among the brilliant

theorists cloistered in the quiet woodside campus of the Institute for Advanced

Study in Princeton, New Jersey, Edward Witten stands out as a kind of high

priest. The sole physicist ever to win the Fields Medal, mathematics’ premier

prize, Witten is also known for discovering M-theory, the leading candidate for

a unified physical “theory of everything.” A genius’s genius,

Witten is tall and rectangular, with hazy eyes and an air of being only

one-quarter tuned in to reality until someone draws him back from more abstract

thoughts.

During a visit this fall, I spotted Witten on the

Institute’s central lawn and requested an interview; in his quick, alto voice,

he said he couldn’t promise to be able to answer my questions but would try.

Later, when I passed him on the stone paths, he often didn’t seem to see me.

Physics luminaries since Albert Einstein, who lived

out his days in the same intellectual haven, have sought to unify gravity with

the other forces of nature by finding a more fundamental quantum theory to

replace Einstein’s approximate picture of gravity as curves in the geometry of

space-time. M-theory, which Witten proposed in 1995, could conceivably offer

this deeper description, but only some aspects of the theory are known.

M-theory incorporates within a single mathematical structure all five versions

of string theory, which renders the

elements of nature as minuscule vibrating strings. These five string theories

connect to each other through “dualities,” or mathematical equivalences. Over

the past 30 years, Witten and others have learned that the string theories are

also mathematically dual to quantum field theories — descriptions of particles

moving through electromagnetic and other fields that serve as the language of

the reigning “Standard Model” of particle physics. While he’s best known as a

string theorist, Witten has discovered many new quantum field theories and

explored how all these different descriptions are connected. His physical

insights have led time and again to deep mathematical discoveries.

Researchers pore over his work and hope he’ll take

an interest in theirs. But for all his scholarly influence, Witten, who is 66,

does not often broadcast his views on the implications of modern theoretical

discoveries. Even his close colleagues eagerly suggested questions they wanted

me to ask him.

When I arrived at his office at the appointed hour

on a summery Thursday last month, Witten wasn’t there. His door was ajar.

Papers covered his coffee table and desk — not stacks, but floods: text

oriented every which way, some pages close to spilling onto the floor.

(Research papers get lost in the maelstrom as he finishes with them, he later

explained, and every so often he throws the heaps away.) Two girls smiled out

from a framed photo on a shelf; children’s artwork decorated the walls, one

celebrating Grandparents’ Day. When Witten arrived minutes later, we spoke for

an hour and a half about the meaning of dualities in physics and math, the

current prospects of M-theory, what he’s reading, what he’s looking for, and

the nature of reality. The interview has been condensed and edited for

clarity.

Physicists

are talking more than ever lately about dualities, but you’ve been studying

them for decades. Why does the subject interest you?

People keep finding new facets of dualities.

Dualities are interesting because they frequently answer questions that are

otherwise out of reach. For example, you might have spent years pondering a

quantum theory and you understand what happens when the quantum effects are

small, but textbooks don’t tell you what you do if the quantum effects are big;

you’re generally in trouble if you want to know that. Frequently dualities

answer such questions. They give you another description, and the questions you

can answer in one description are different than the questions you can answer

in a different description.

What are some of these

newfound facets of dualities?

It’s open-ended because there are so many different

kinds of dualities. There are dualities between a gauge theory [a theory, such

as a quantum field theory, that respects certain symmetries] and another gauge

theory, or between a string theory for weak coupling [describing strings that

move almost independently from one another] and a string theory for strong

coupling. Then there’s AdS/CFT duality, between a gauge theory and a

gravitational description. That duality was discovered 20 years ago, and it’s amazing to what

extent it’s still fruitful. And that’s largely because around 10 years ago, new

ideas were introduced that rejuvenated it. People had new insights about

entropy in quantum field theory — the whole story about “it from qubit.”

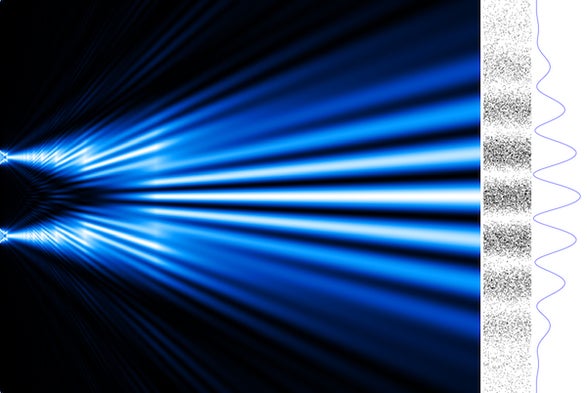

The AdS/CFT

duality connects a theory of gravity in a space-time region called

anti-de Sitter space (which curves differently than our universe) to an

equivalent quantum field theory describing that region’s gravity-free boundary.

Everything there is to know about AdS space — often called the “bulk” since

it’s the higher-dimensional region — is encoded, like in a hologram, in quantum

interactions between particles on the lower-dimensional boundary. Thus, AdS/CFT

gives physicists a “holographic” understanding of the quantum nature of

gravity.

That’s the idea that

space-time and everything in it emerges like a hologram out of information

stored in the entangled quantum states of particles.

Yes. Then there are dualities in math, which can

sometimes be interpreted physically as consequences of dualities between two

quantum field theories. There are so many ways these things are interconnected

that any simple statement I try to make on the fly, as soon as I’ve said it I

realize it didn’t capture the whole reality. You have to imagine a web of

different relationships, where the same physics has different descriptions,

revealing different properties. In the simplest case, there are only two

important descriptions, and that might be enough. If you ask me about a more

complicated example, there might be many, many different ones.

Given this web of

relationships and the issue of how hard it is to characterize all duality, do

you feel that this reflects a lack of understanding of the structure, or is it

that we’re seeing the structure, only it’s very complicated?

I’m not certain what we should hope for.

Traditionally, quantum field theory was constructed by starting with the

classical picture [of a smooth field] and then quantizing it. Now we’ve learned

that there are a lot of things that happen that that description doesn’t do

justice to. And the same quantum theory can come from different classical

theories. Now, Nati Seiberg [a theoretical

physicist who works down the hall] would possibly tell you that he has faith

that there’s a better formulation of quantum field theory that we don’t know

about that would make everything clearer. I’m not sure how much you should

expect that to exist. That would be a dream, but it might be too much to hope

for; I really don’t know.

There’s another curious fact that you might want to

consider, which is that quantum field theory is very central to physics, and

it’s actually also clearly very important for math. But it’s extremely

difficult for mathematicians to study; the way physicists define it is very

hard for mathematicians to follow with a rigorous theory. That’s extremely

strange, that the world is based so much on a mathematical structure that’s so

difficult.

What do you

see as the relationship between math and physics?

I prefer not to give you a cosmic answer but to

comment on where we are now. Physics in quantum field theory and string theory

somehow has a lot of mathematical secrets in it, which we don’t

know how to extract in a systematic way. Physicists are able to come up with

things that surprise the mathematicians. Because it’s hard to describe

mathematically in the known formulation, the things you learn about quantum

field theory you have to learn from physics.

I find it hard to believe there’s a new formulation

that’s universal. I think it’s too much to hope for. I could point to theories

where the standard approach really seems inadequate, so at least for those

classes of quantum field theories, you could hope for a new formulation. But I really

can’t imagine what it would be.

You can’t imagine it at

all?

No, I can’t. Traditionally it was thought that

interacting quantum field theory couldn’t exist above four dimensions, and

there was the interesting fact that that’s the dimension we live in. But one of

the offshoots of the string dualities of the 1990s was that it was discovered

that quantum field theories actually exist in five and six dimensions. And it’s

amazing how much is known about their properties.

I’ve heard about the

mysterious (2,0) theory, a quantum field theory describing particles in six

dimensions, which is dual to M-theory describing strings and gravity in

seven-dimensional AdS space. Does this (2,0) theory play an important role in

the web of dualities?

Yes, that’s the pinnacle. In terms of

conventional quantum field theory without gravity, there is nothing quite like

it above six dimensions. From the (2,0) theory’s existence and main

properties, you can deduce an incredible amount about what happens in

lower dimensions. An awful lot of important dualities in four and fewer

dimensions follow from this six-dimensional theory and its

properties. However, whereas what we know about quantum field theory is

normally from quantizing a classical field theory, there’s no reasonable

classical starting point of the (2,0) theory. The (2,0) theory has properties

[such as combinations of symmetries] that sound impossible when you first hear

about them. So you can ask why dualities exist, but you can also ask why is there

a 6-D theory with such and such properties? This seems to me a more fundamental

restatement.

Dualities sometimes make it

hard to maintain a sense of what’s real in the world, given that there are

radically different ways you can describe a single system. How would you

describe what’s real or fundamental?

What aspect of what’s real are you interested in?

What does it mean that we exist? Or how do we fit into our mathematical

descriptions?

The latter.

Well, one thing I’ll tell you is that in general,

when you have dualities, things that are easy to see in one description can be

hard to see in the other description. So you and I, for example, are fairly

simple to describe in the usual approach to physics as developed by Newton and

his successors. But if there’s a radically different dual description of the

real world, maybe some things physicists worry about would be clearer, but the

dual description might be one in which everyday life would be hard to describe.

What would you say about

the prospect of an even more optimistic idea that there could be one single

quantum gravity description that really does help you in every case in the real

world?

Well, unfortunately, even if it’s correct I can’t

guarantee it would help. Part of what makes it difficult to help is that the

description we have now, even though it’s not complete, does explain an awful

lot. And so it’s a little hard to say, even if you had a truly better

description or a more complete description, whether it would help in practice.

Are you speaking of

M-theory?

M-theory is the candidate for the better

description.

You proposed M-theory 22

years ago. What are its prospects today?

Personally, I thought it was extremely clear it

existed 22 years ago, but the level of confidence has got to be much higher

today because AdS/CFT has given us precise definitions, at least in AdS

space-time geometries. I think our understanding of what it is, though, is

still very hazy. AdS/CFT and whatever’s come from it is the main new

perspective compared to 22 years ago, but I think it’s perfectly possible that

AdS/CFT is only one side of a multifaceted story. There might be other equally important facets.

What’s an

example of something else we might need?

Maybe a bulk description of the quantum properties

of space-time itself, rather than a holographic boundary description. There

hasn’t been much progress in a long time in getting a better bulk description.

And I think that might be because the answer is of a different kind than

anything we’re used to. That would be my guess.

Are you willing to

speculate about how it would be different?

I really doubt I can say anything useful. I guess I

suspect that there’s an extra layer of abstractness compared to what we’re used

to. I tend to think that there isn’t a precise quantum description of space-time

— except in the types of situations where we know that there is, such as in AdS

space. I tend to think, otherwise, things are a little bit murkier than an

exact quantum description. But I can’t say anything useful.

The other night I was reading an old essay by the

20th-century Princeton physicist John Wheeler. He was a visionary, certainly.

If you take what he says literally, it’s hopelessly vague. And therefore, if I

had read this essay when it came out 30 years ago, which I may have done, I

would have rejected it as being so vague that you couldn’t work on it, even if

he was on the right track.

You’re referring to “Information, Physics, Quantum,” Wheeler’s 1989 essay

propounding the idea that the physical universe arises from information, which

he dubbed “it from bit.” Why were you reading it?

I’m trying to learn about what people are trying to

say with the phrase “it from qubit.” Wheeler talked about “it from bit,” but

you have to remember that this essay was written probably before the term

“qubit” was coined and certainly before it was in wide currency. Reading it, I

really think he was talking about qubits, not bits, so “it from qubit” is

actually just a modern translation.

Don’t expect me to be able to tell you anything

useful about it — about whether he was right. When I was a beginning grad

student, they had a series of lectures by faculty members to the new students

about theoretical research, and one of the people who gave such a lecture was

Wheeler. He drew a picture on the blackboard of

the universe visualized as an eye looking at itself. I had no idea what he was

talking about. It’s obvious to me in hindsight that he was explaining what it

meant to talk about quantum mechanics when the observer is part of the quantum

system. I imagine there is something we don’t understand about that.

Observing a quantum system

irreversibly changes it, creating a distinction between past and future. So the

observer issue seems possibly related to the question of time, which we also

don’t understand. With the AdS/CFT duality, we’ve learned that new spatial

dimensions can pop up like a hologram from quantum information on the boundary.

Do you think time is also emergent — that it arises from a timeless complete

description?

I tend to assume that space-time and everything in

it are in some sense emergent. By the way, you’ll certainly find that that’s

what Wheeler expected in his essay. As you’ll read, he thought the continuum

was wrong in both physics and math. He did not think one’s microscopic

description of space-time should use a continuum of any kind — neither a

continuum of space nor a continuum of time, nor even a continuum of real

numbers. On the space and time, I’m sympathetic to that. On the real numbers,

I’ve got to plead ignorance or agnosticism. It is something I wonder about, but

I’ve tried to imagine what it could mean to not use the continuum of real

numbers, and the one logician I tried discussing it with didn’t help me.

Do you consider Wheeler a

hero?

I wouldn’t call him a hero, necessarily, no. Really

I just became curious what he meant by “it from bit,” and what he was saying.

He definitely had visionary ideas, but they were too far ahead of their

time. I think I was more patient in reading a vague but inspirational essay

than I might have been 20 years ago. He’s also got roughly 100

interesting-sounding references in that essay. If you decided to read them all,

you’d have to spend weeks doing it. I might decide to look at a few of them.

Why do you

have more patience for such things now?

I think when I was younger I always thought the

next thing I did might be the best thing in my life. But at this point in life

I’m less persuaded of that. If I waste a little time reading somebody’s essay,

it doesn’t seem that bad.

Do you ever take your mind

off physics and math?

My favorite pastime is tennis. I am a very average

but enthusiastic tennis player.

In contrast to Wheeler, it

seems like your working style is to come to the insights through the

calculations, rather than chasing a vague vision.

In my career I’ve only been able to take small

jumps. Relatively small jumps. What Wheeler was talking about was an enormous

jump. And he does say at the beginning of the essay that he has no idea if this

will take 10, 100 or 1,000 years.

And he was talking about

explaining how physics arises from information.

Yes. The way he phrases it is broader: He wants to

explain the meaning of existence. That was actually why I thought you were

asking if I wanted to explain the meaning of existence.

I see. Does he have any

hypotheses?

No. He only talks about things you shouldn’t do and

things you should do in trying to arrive at a more fundamental description of

physics.

Do you have any ideas about

the meaning of existence?

No. [Laughs.]

Correction: This article

was updated on Nov. 29, 2017, to clarify that M-theory is the leading candidate

for a unified theory of everything. Other ideas have been proposed that also

claim to unify the fundamental forces.

Note: The original has photos by Jean Sweep for Quanta Magazine.